Why multiple processes? Check this

table of latencies.

We can use the CPU while RAM, disk, and network access are

occurring.

Two types of parallelism:

Pseudo-parallelism: on one CPU, gives the appearance that

processes are running simultaneously.

Real parallelism: with multiple CPUs, processes

do run simultaneously. (But not all of them,

usually: e.g., four CPUs on my Mac, but 392 processes.)

The illusion of parallelism is produced by continually

switching the CPU between processes. These switches are

expensive.
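To make the switching concrete, here is a small simulation sketch. It assumes a round-robin policy with a fixed time slice; the notes do not name a scheduling policy, so that choice, the function name `round_robin`, and the job names are illustrative only. Each entry in the returned timeline stands for one stretch on the CPU, and every boundary between entries is a switch.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Simulate one CPU shared by several processes.

    jobs maps a process name to the units of CPU work it still needs.
    Returns the list of (name, units_run) slices in the order they ran.
    Round-robin is an assumed policy, chosen only for illustration.
    """
    ready = deque(jobs.items())
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        ran = min(quantum, remaining)
        timeline.append((name, ran))
        if remaining > ran:
            # Not finished: switch away and go to the back of the queue.
            ready.append((name, remaining - ran))
    return timeline


if __name__ == "__main__":
    for name, ran in round_robin({"A": 3, "B": 2}, quantum=1):
        print(name, ran)
```

With a small quantum the two jobs interleave so finely that both appear to progress at once, but the number of switches (and their cost) goes up.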

There is no real difference conceptually between what a

single CPU running 100 processes does and what a four-core CPU

running 400 processes does. So here we will just look at the

single CPU situation.

It is important to know that from the point of view of a

single program, multiprocessing renders the behavior of the

program nondeterministic. The program cannot be certain how

frequently it will get the CPU. This can become crucial

with programs doing things like playing a video, and

really, really crucial, if the program is monitoring the

state of a nuclear power plant or a patient's vital signs

in the hospital.

A process is a program in action.

How processes are created:

Technically, in all cases, 2 is how new processes

are created.

At boot time, a windowing system or command shell may be

started. But the system also starts daemons that listen on

ports for mail or web page requests, scan for viruses,

handle print requests, etc.

The book claims that the batch situation only applies on

mainframes. But UNIX has a cron facility for running batch

jobs, and Windows has a Task Scheduler.

UNIX: the fork and exec pair starts a process. After fork,

the child is a copy of the parent. It can then manipulate

file handles, and then exec a new program in its process

space.
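The fork-then-exec pattern can be sketched with Python's thin wrappers around the UNIX calls (`os.fork`, `os.execvp`, `os.waitpid`). This is a minimal sketch for UNIX-like systems only; the helper name `run_in_child` is made up for the example.

```python
import os
import sys


def run_in_child(argv):
    """Fork, exec argv in the child, and return the child's exit code."""
    pid = os.fork()  # from here on, two processes are running this code
    if pid == 0:
        # Child: a copy of the parent. This is where it could
        # rearrange file descriptors before loading the new program.
        try:
            os.execvp(argv[0], argv)  # replaces the program; no return on success
        except OSError:
            os._exit(127)  # exec failed: leave immediately
    # Parent: wait for the child and decode its status.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return -os.WTERMSIG(status)  # child was ended by a signal


if __name__ == "__main__":
    code = run_in_child([sys.executable, "-c", "print('hello from the child')"])
    print("child exited with", code)
```

The gap between fork and exec is the point of the two-call design: the child still runs the parent's code there, so it can set up redirection before the new program starts.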

Windows: CreateProcess is the single call that

accomplishes both of the above tasks. Win32 has about 100 other

calls for managing processes.

How processes terminate:

Very often, a process ends because it has finished its work.

The user clicks "Exit" on a word processor, or a compiler

finishes compiling a program. In UNIX, the process executes

the exit system call, and on Windows,

ExitProcess.

The second case is really a special instance of the first:

if we try to compile foo.c and no such file exists, the

compiler exits with the complaint "No such file." but it

may set a different exit code.
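The difference between a normal exit and an error exit shows up in the exit code the parent collects. The sketch below starts small Python child processes as stand-ins for the compiler; the helper name `exit_code_of` and the code 2 are illustrative choices, not anything a real compiler is guaranteed to use.

```python
import subprocess
import sys


def exit_code_of(snippet):
    """Run a Python snippet in a new process and return its exit code."""
    return subprocess.run([sys.executable, "-c", snippet]).returncode


if __name__ == "__main__":
    # Normal exit: the process finished its work.
    print(exit_code_of("pass"))
    # Error exit: the process gives up voluntarily with a nonzero code.
    print(exit_code_of("import sys; sys.exit(2)"))
```

By convention, zero means success and any nonzero value means some kind of failure, which is how shells and build tools decide whether to continue.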

In the third case, the process has done something naughty,

and the OS kills it: it tried an illegal instruction (e.g.,

switching into kernel mode without executing a system call)

or accessed memory not its own, or divided by zero.

The fourth case is when one process kills another with a

system call and process ID. In UNIX, the call is

kill, and in Windows, TerminateProcess. In

neither case does this kill processes created by the murder

victim. (In some OSes it will.) The murderer needs the

right permissions, e.g., a user process can't kill a kernel

process, and a (non-root) user can't kill the process of

another user.
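A minimal sketch of the fourth case on a UNIX-like system: the parent starts a child that would otherwise sleep for a minute, then ends it with `os.kill` and the child's process ID. The function name `kill_child` is invented for the example, and SIGTERM is just one of the signals that could be sent.

```python
import os
import signal
import subprocess
import sys


def kill_child():
    """Start a sleeping child, kill it by PID, and return its return code."""
    victim = subprocess.Popen(
        [sys.executable, "-c", "import time; time.sleep(60)"]
    )
    os.kill(victim.pid, signal.SIGTERM)  # the kill system call, by process ID
    # For a child ended by a signal, Popen reports the negated signal number.
    return victim.wait()


if __name__ == "__main__":
    print(kill_child())
```

This works here because the victim belongs to the same user as the killer; sending the same signal to another user's process would fail with a permission error.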

UNIX processes form a hierarchy: a parent spawns child

processes, which spawn grandchild processes, etc. For

instance, a signal may be sent to the whole process group.

Windows has no such process hierarchy.

In this case, we can usefully turn to the Wikipedia page on the process control block.

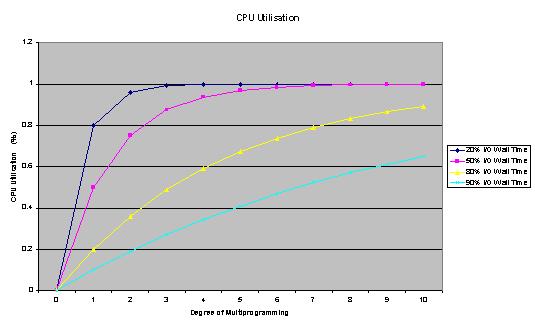

We look at CPU usage from a probabilistic viewpoint, and

try to see what the likely rate of CPU usage is. We

get a formula, where p is the fraction of time an

average process waits for I/O, and n is the number

of processes:

CPU utilization = $1 - p^n$
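The reasoning behind the formula: if each process waits for I/O a fraction p of the time, and we assume the processes wait independently of one another, then the chance that all n are waiting at once (leaving the CPU idle) is p multiplied by itself n times. Utilization is one minus that. A quick sketch to try some numbers; the function name `cpu_utilization` is just for illustration.

```python
def cpu_utilization(p, n):
    """Fraction of time the CPU is busy.

    p: fraction of time an average process waits for I/O (0 <= p <= 1)
    n: number of processes in memory
    Assumes the processes wait for I/O independently of each other.
    """
    return 1 - p ** n


if __name__ == "__main__":
    # Processes that wait for I/O 80% of the time.
    for n in (1, 2, 4, 8):
        print(n, round(cpu_utilization(0.8, n), 4))
```

For example, with p = 0.8 a single process keeps the CPU busy only 20% of the time, while four such processes raise that to about 59%.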