Hypervisors add a level of indirection to the domain of computer hardware. They provide the abstraction of a virtual machine: each one thinks it is "king of the hill" and has a whole machine to itself. Ideally, the VMs should be just like the emulated machine, as fast as the emulated machine, and completely isolated from each other.

VMware had these goals (general to most virtualization): compatibility, performance, and isolation.

There was tension between the requirements. For example, total compatibility might need to be sacrificed for performance. But the designers held isolation as paramount.

The primary challenges were:

Possible approaches:

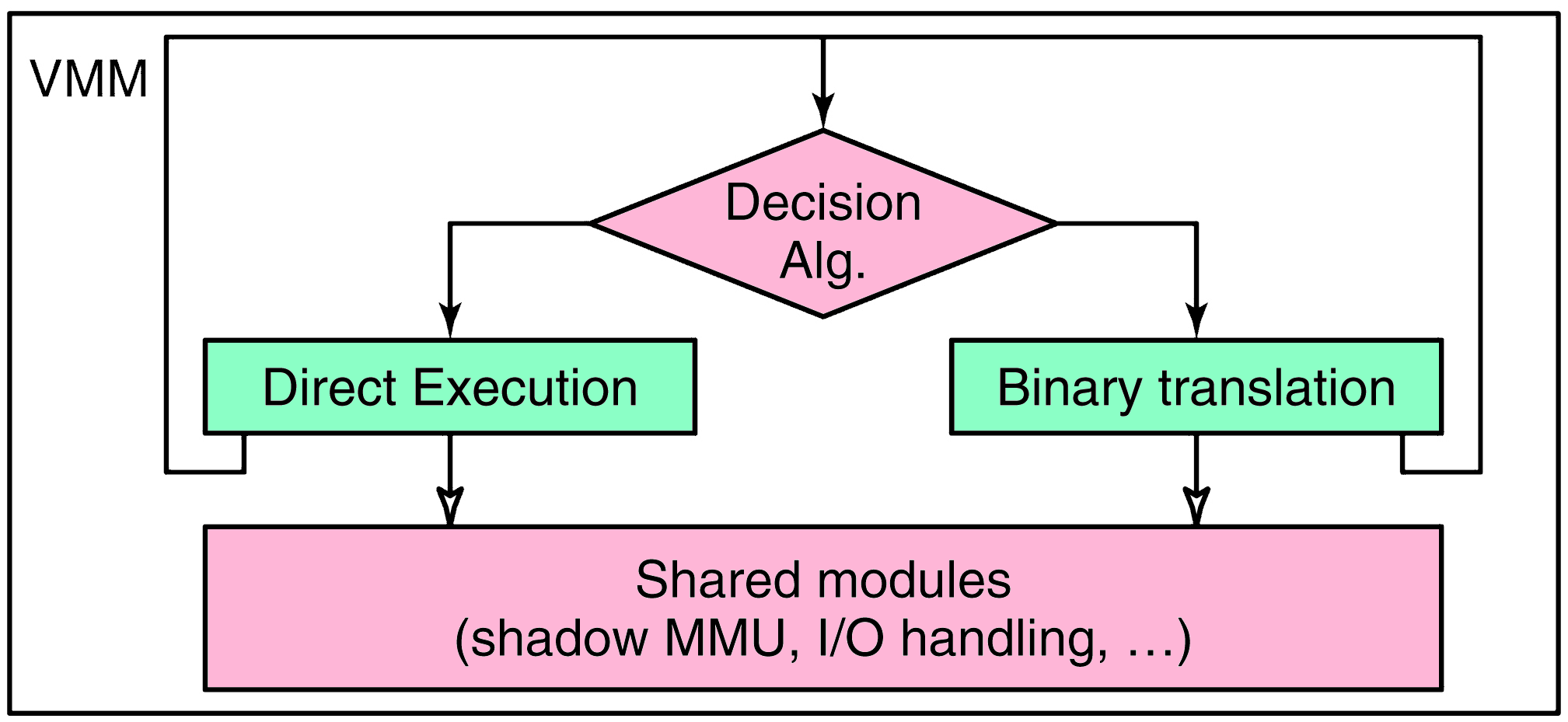

The solution:

Binary translation must be used if:

VMware brings binary translation close to native speed because it does not translate everything: whenever it is safe, it sets up the hardware to run the guest code directly instead of translating it in software. With this combination, guests run at 80% of native speed instead of 20%.
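
The choice between direct execution and binary translation can be sketched as a dispatcher that prefers the fast path and falls back to translation. This is a minimal sketch, not VMware's code. `VCpuState`, `Mode`, `needs_binary_translation`, and `run_guest` are hypothetical names. The three trigger conditions (guest in kernel mode, guest interrupts disabled, guest in real mode) are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    REAL = "real"            # legacy 16-bit mode
    PROTECTED = "protected"


@dataclass
class VCpuState:
    """Hypothetical snapshot of the guest's virtual CPU state."""
    cpl: int                  # current privilege level: 0 (kernel) .. 3 (user)
    interrupts_enabled: bool  # the guest's virtual interrupt flag
    mode: Mode


def needs_binary_translation(state: VCpuState) -> bool:
    """Assumed conditions under which guest code cannot safely run directly."""
    return (
        state.cpl == 0
        or not state.interrupts_enabled
        or state.mode is Mode.REAL
    )


def run_guest(state: VCpuState) -> str:
    # Fast path: let the hardware execute the guest code as-is.
    if not needs_binary_translation(state):
        return "direct execution"
    # Slow path: rewrite the code in software before running it.
    return "binary translation"
```

Under these assumptions, user-mode guest code takes the direct-execution path. The more of the guest's time spent on that path, the closer the VM gets to native speed.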

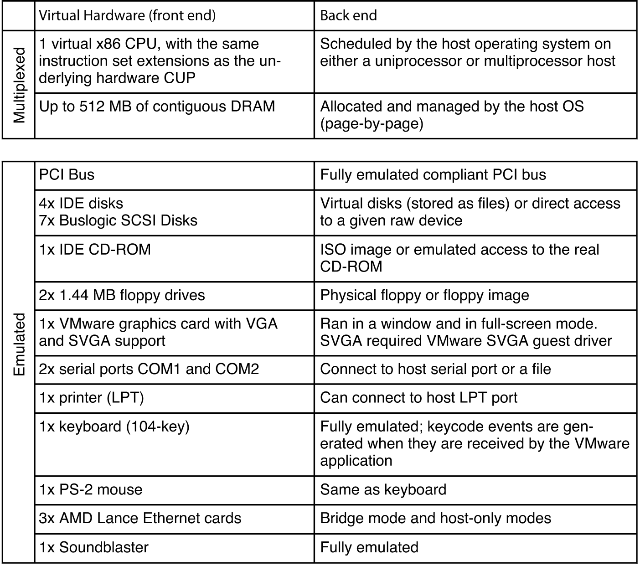

Two layers:

Example: the "Lance" 10-Mbps Ethernet card. VMware "supported" this card long after the real thing was off the market, and its emulated version eventually ran 10x faster than the original hardware.

The actual hardware did not have to be what the guest OS thought was there! The guest just talked to VMware's emulated device (the front-end), and that front-end could be coupled with different back-ends.
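
The front-end/back-end split can be sketched as a device model that delegates to a pluggable back-end. This is a minimal, hypothetical Python sketch. `EmulatedLance`, `NetBackend`, and `LoopbackBackend` are illustrative names, not VMware interfaces. The toy back-end only records frames instead of sending them.

```python
from abc import ABC, abstractmethod


class NetBackend(ABC):
    """Host-side code that actually moves packets (hypothetical interface)."""

    @abstractmethod
    def send(self, frame: bytes) -> None: ...


class LoopbackBackend(NetBackend):
    """Toy back-end that records frames; a real one might use a host NIC."""

    def __init__(self) -> None:
        self.sent: list[bytes] = []

    def send(self, frame: bytes) -> None:
        self.sent.append(frame)


class EmulatedLance:
    """Front-end: the device the guest OS believes it is driving."""

    def __init__(self, backend: NetBackend) -> None:
        self.backend = backend

    def transmit(self, frame: bytes) -> None:
        # The guest's driver hands over a frame; the front-end forwards it to
        # whichever back-end is plugged in, regardless of the real hardware.
        self.backend.send(frame)
```

Replacing `LoopbackBackend` with another `NetBackend` subclass changes where frames go. Nothing the guest OS sees changes.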

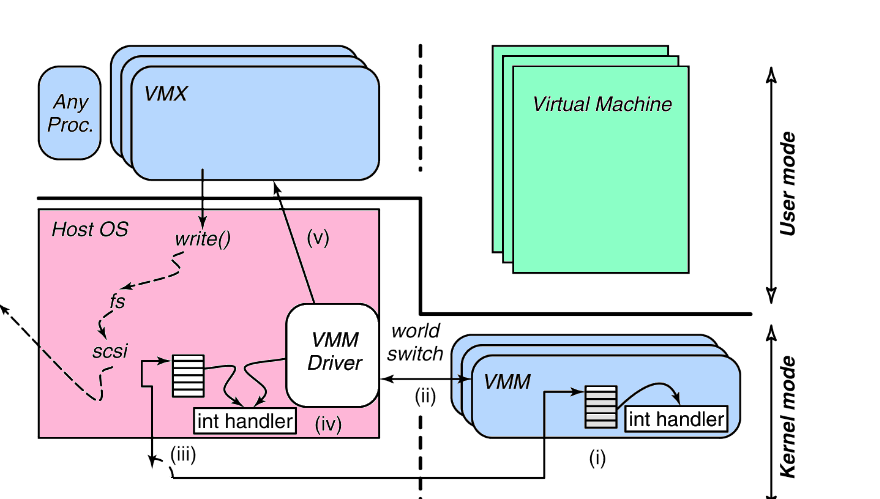

So, create three components:

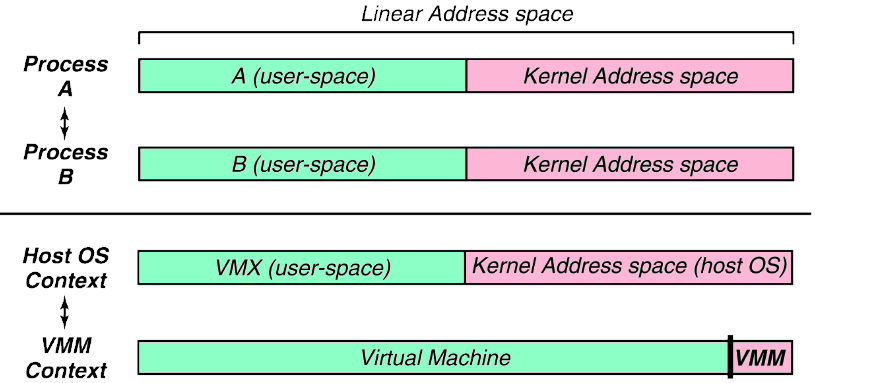

VMX runs as an ordinary process on the host OS. But the VMM is a peer of the host OS, not a process inside it. The VMX suspends the host OS and gives the VMM full control of the machine. This is a world switch.

The VMM and the host OS have entirely different address spaces.

Although the world switch was earlier described as very time-consuming, here the book says it takes only 45 instructions!
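
A world switch can be modeled as saving one complete CPU context and loading another, including its address space. This toy model is not the actual 45-instruction sequence. `World`, `Cpu`, and `world_switch` are hypothetical. The field `page_table_root` stands in for the x86 CR3 register, which selects the active page tables.

```python
from dataclasses import dataclass, field


@dataclass
class World:
    """Hypothetical execution context: registers plus its own address space."""
    name: str
    registers: dict[str, int] = field(default_factory=dict)
    page_table_root: int = 0  # stands in for this world's CR3 value


@dataclass
class Cpu:
    registers: dict[str, int] = field(default_factory=dict)
    page_table_root: int = 0
    current: str = ""


def world_switch(cpu: Cpu, save_to: World, load_from: World) -> None:
    # Save the outgoing world's complete CPU state...
    save_to.registers = dict(cpu.registers)
    save_to.page_table_root = cpu.page_table_root
    # ...then load the incoming world's state, page tables included, so the
    # two worlds never share an address space.
    cpu.registers = dict(load_from.registers)
    cpu.page_table_root = load_from.page_table_root
    cpu.current = load_from.name
```

Each world carries its own `page_table_root`, so switching worlds also switches address spaces. That is what keeps the VMM and the host OS entirely separate.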

The VMM / host OS architecture remains the same.

But today, VMware Workstation can rely on:

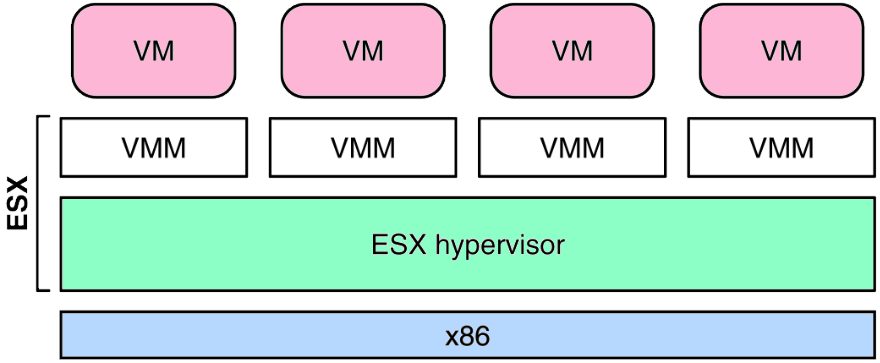

Not having a host OS to rely upon means ESX has more work to do than VMware Workstation. But when IT organizations are trying to run thousands of virtual machines, a type 1 hypervisor makes sense: because it runs directly on the hardware, with no host OS in the path, it can run significantly faster.
